Image credit: Pixabay

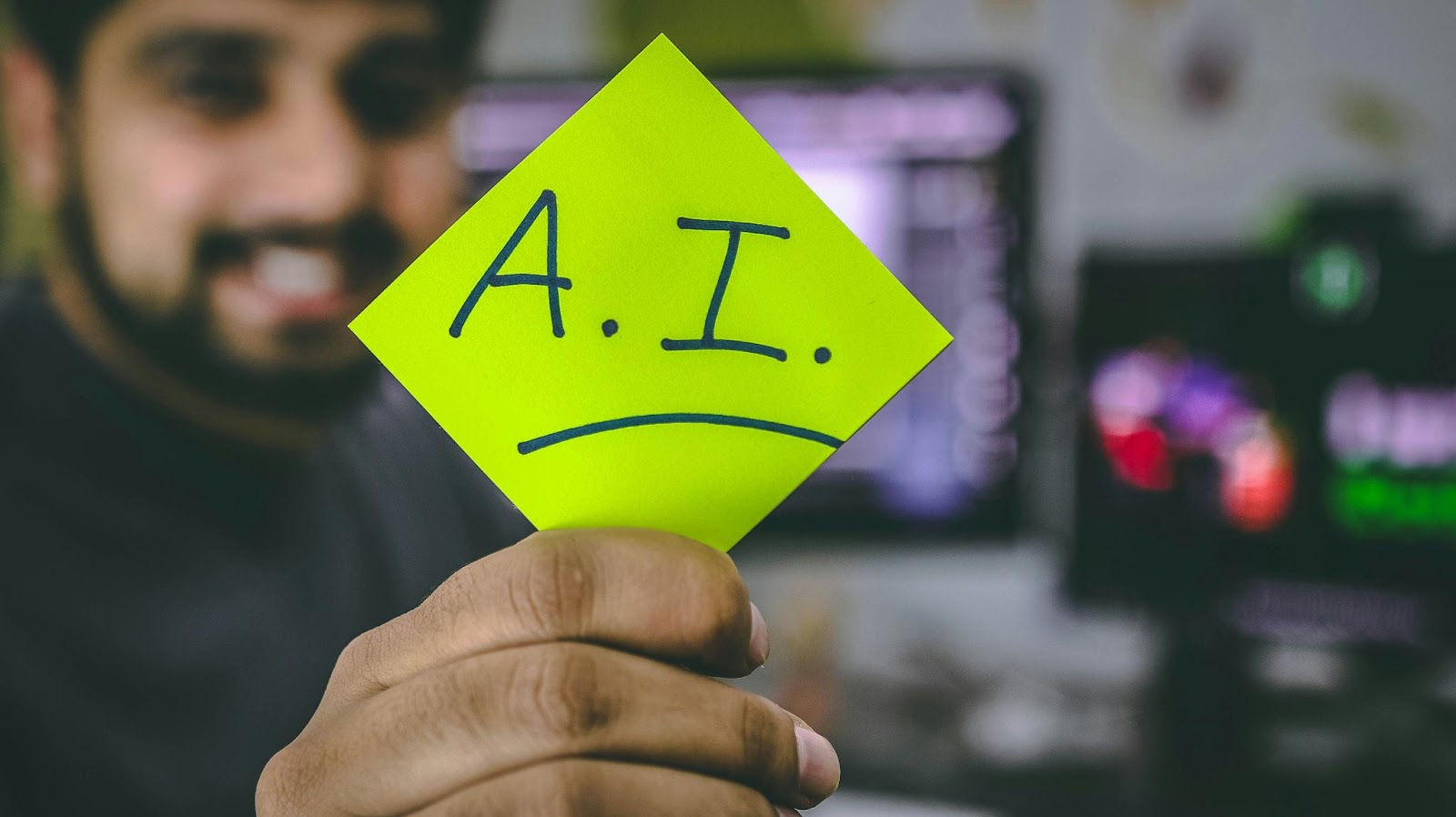

Artificial intelligence is no longer confined to laboratories or research institutions. It lives in our phones, our workflows, our shopping habits, and even our choices about who we trust. As AI seeps into nearly every corner of life, ethical questions have shifted from academic exercises to practical, everyday concerns. Data misuse, algorithmic bias, and lack of transparency have become urgent issues, and the conversation around responsible AI has evolved from theory into necessity.

But a growing wave of tech companies is building a new playbook that joins innovation with integrity. Across sectors from consumer research to property law to fintech, these leaders are designing systems that learn from people rather than exploit them, and that make empathy, privacy, and accountability central to progress.

Building AI That Reflects Real People

The industry’s shift from scraping vast, impersonal datasets to engaging directly with real human experience marks a profound change in how AI learns. Vurvey Labs, for example, is redefining the data pipeline by training AI on first-person video interviews. These are authentic human stories in motion.

“We wanted to figure out how to interview the world,” says Chad Reynolds, founder and CEO of Vurvey Labs. “If you could co-create with hundreds of thousands of consumers, powered by their voices rather than static slides, imagine how much better we could innovate.”

Reynolds’ philosophy goes beyond sentiment and has become structural. Vurvey’s systems train AI models using the lived experiences of real people, which allows the machine to understand not only what consumers think, but why. Reynolds explains, “The internet documents our accomplishments and how we want to be seen. It doesn’t reflect who we really are.”

By rooting AI in authentic human data rather than the noisy sprawl of the web, Vurvey addresses one of the field’s core ethical problems: how to achieve empathy without exploitation. The result is an AI that listens first and computes second. That inversion of priority, subtle but powerful, makes the technology feel more like a collaborator than a mirror.

Human-in-the-Loop Systems in High-Stakes Industries

In sectors such as real estate and law, the moral implications of AI extend beyond bias and into trust. Andrea Monti Solza, co-founder of Conveyo.io, calls this “the trust problem.” His company digitizes property transactions in the UK, where deals still take months despite fully digital records.

“Real estate has always been built on reputation,” Solza explains. “Lawyers and agents aren’t the first to jump on new tech. We had to earn trust by proving the system works and protects clients.”

Conveyo does the heavy lifting of streamlining processes and, in effect, empowers professionals. Its automation engine manages legal workflows but keeps lawyers firmly in control. “The technology doesn’t replace the lawyer,” Solza says. “It gives them a complete, digitized case file so they can focus where their expertise is really needed.”

This approach, automation guided by human oversight, strikes a careful balance between efficiency and accountability. In Conveyo’s system, transparency itself becomes an ethical safeguard. “Once you make a process more transparent, you can see who’s doing their job and who isn’t,” Solza adds.

The company’s success rests not on flashy promises but on re-earning public confidence. “You can be the most competent solicitor in the world,” he says, “but if you’re still using the horse instead of the car, you’re slowing everyone down.”

Guardrails for SMBs and Ethical Use

While global enterprises can afford AI compliance departments, small and mid-sized businesses often cannot. The team at SuperWebPros, led by Jesse Flores, helps smaller firms implement AI tools safely and sensibly without losing sight of human judgment.

Flores’ background blends software engineering with theology, giving him an unusual perspective on AI ethics. “All tools should serve humans,” he says. “There are common values that all people both embrace and deserve by right. That’s dignity, responsibility, freedom.”

For Flores, AI ethics is a matter of posture. He has seen what happens when businesses adopt AI systems without understanding their implications. “Many small companies don’t think about governance,” he notes. “Too many people have too much access to sensitive information. It’s not always malicious, but it’s neglect.”

When asked how he defines ethical AI for smaller firms, Flores offers a simple but resonant rule: “If you’d feel shame explaining your work to your grandma, it’s probably not ethical.”

That litmus test, equal parts humor and humanity, reframes the ethics conversation in terms anyone can grasp. SuperWebPros does not talk about compliance. It talks about conscience.

AI With a Conscience: Marketing and Loyalty

Ethical responsibility doesn’t stop at automation or data handling. It also extends into how AI interacts with consumers. Get Kudos, a platform that helps small businesses retain customers through SMS marketing, integrates oversight directly into its systems.

CEO Izhak Musli explains, “We’re adding an AI overseer to flag unethical promotions. Responsible AI means education and protection, not just automation.”

Musli’s understanding of technology is both technical and personal. “We say ‘personal touch,’ but when we think technology, we really mean ‘targeted,’” he says. “A computer can mimic natural language and even humor, but it isn’t your friend. It’s not truly personal.”

That realism fuels Get Kudos’ mission. The AI overseer is designed to flag manipulative messaging as well as unethical promotions.

He envisions a future where service, not code, defines value. “We’re moving from software as a service to service as software,” he says. “The real innovation will come from helping people use technology ethically, not just selling them another app.”

In a market that prizes speed and scale, Get Kudos’ careful, human-centered approach feels almost rebellious. It is a reminder that respect for the customer can be its own form of intelligence.

Privacy and Compliance at the Core

Nowhere is the tension between innovation and ethics more visible than in fintech. PepperMill approaches AI with a compliance-first mindset, integrating privacy safeguards from the outset.

PepperMill’s systems operate on top of more than 200 large language models, but the company eliminates any that display bias or hallucinate unreliable data. “LLMs are designed to give you an answer even if they don’t have a good one,” CEO Anh Hatzopoulos explains. “So we rerun things, test for reasoning, and ground outputs in verified data.”

Internally, the company uses what Hatzopoulos calls a “shift-left” approach, starting with compliance from day one rather than retrofitting it later. “You start with governance. You don’t do compliance after the fact,” she says.

Her greatest concern is what she calls shadow AI: employees using public tools without realizing the risks. “Not out of malice,” she says, “but because people just want to get things done. That’s how enterprise value walks out the door.”

PepperMill prevents that by keeping all data inside a secure environment. “Not a single piece of client data that runs through our system is ever used for training. No exceptions,” Hatzopoulos notes.

In her view, real progress in AI depends on the courage to connect ethics with enforcement. “Policy without enforcement doesn’t work,” she says. “Machines can’t be accountable. People and organizations must be.”

The Road Ahead: Empathy, Transparency & Oversight

As AI continues to influence decision-making across sectors, the ethical frameworks guiding its development will determine its long-term societal impact. Building ethical AI is not an abstract ideal but a practical, achievable reality.

The collective efforts of these responsible tech companies are paving the way toward a future that will depend not on how intelligent machines become, but on how human their creators choose to remain.